Should we fear AutoML to rule the world of automated machine learning anytime soon?

- Sivasathivel Kandasamy

- Jun 27, 2019

- 3 min read

Automated Machine Learning

Automated machine learning is the process of automating the end-to-end machine learning process. The steps involved in developing machine learning (ML) applications usually include:

data pre-processing

feature engineering

model selection

model tuning

Automated ML aims to automate this process end-to-end.

These processes need seasoned data scientists, with domain knowledge. Such data scientists are in demand and command premium salaries. Automated ML seems to obviate the need for such data scientists [1,2]. This seems to be the major attraction to many of the businesses - pay less get more[3].

AutoML

AutoML is one of the popular frameworks currently, for automated machine learning. Some of the features of AutoML include:

Analytics - the relationship between features and targets

Feature Engineering - around dates

Robust Scaling

Feature Selection

Data Formatting

Model Selection

Hyperparameter Tuning

Ensembling - SubPredictors and Weak Predictors

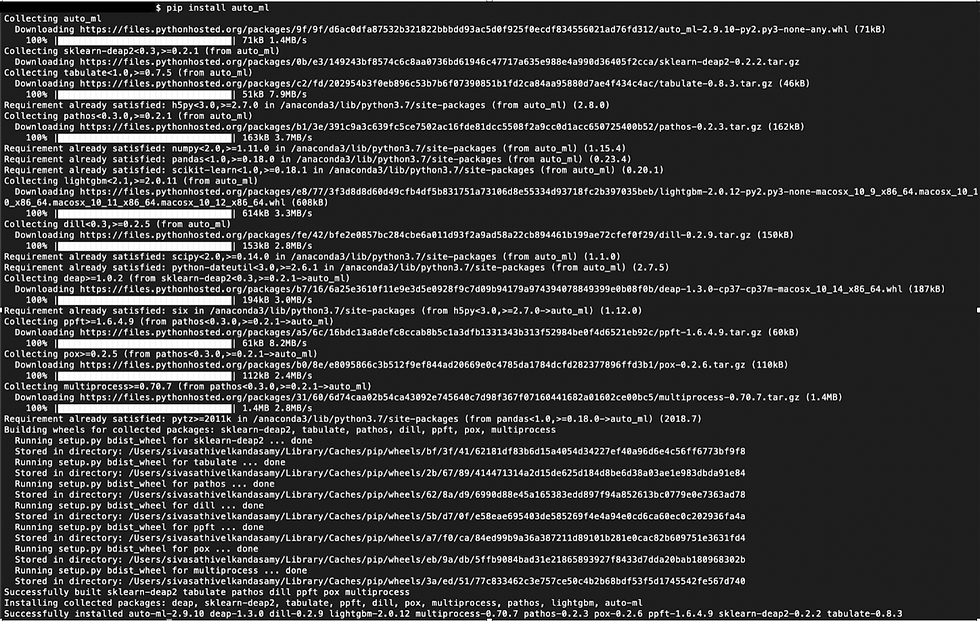

AutoML is a native python library. You can install it using the python package manager:

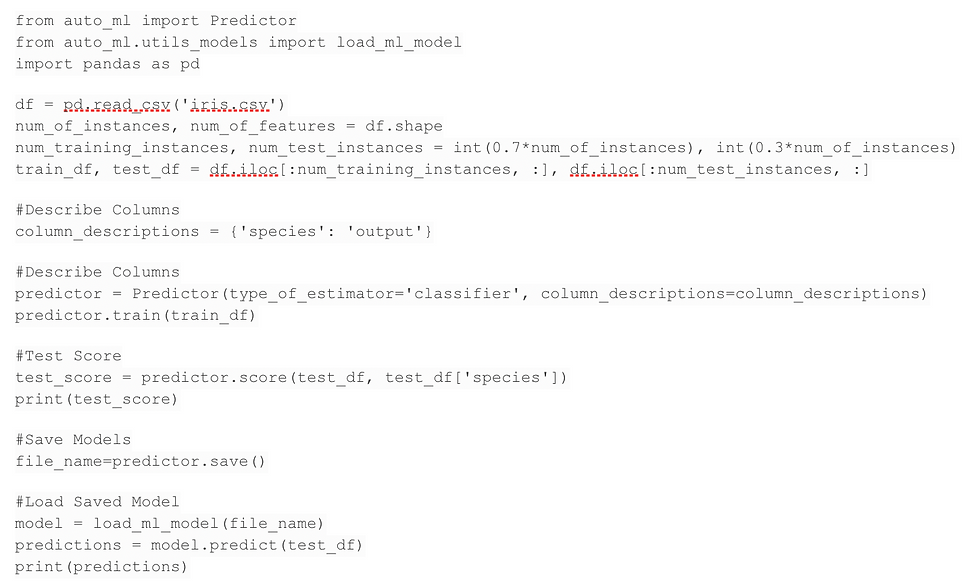

The usage of this framework is also very simple:

A sample training output is given below:

Deep Dive into the AutoML Code

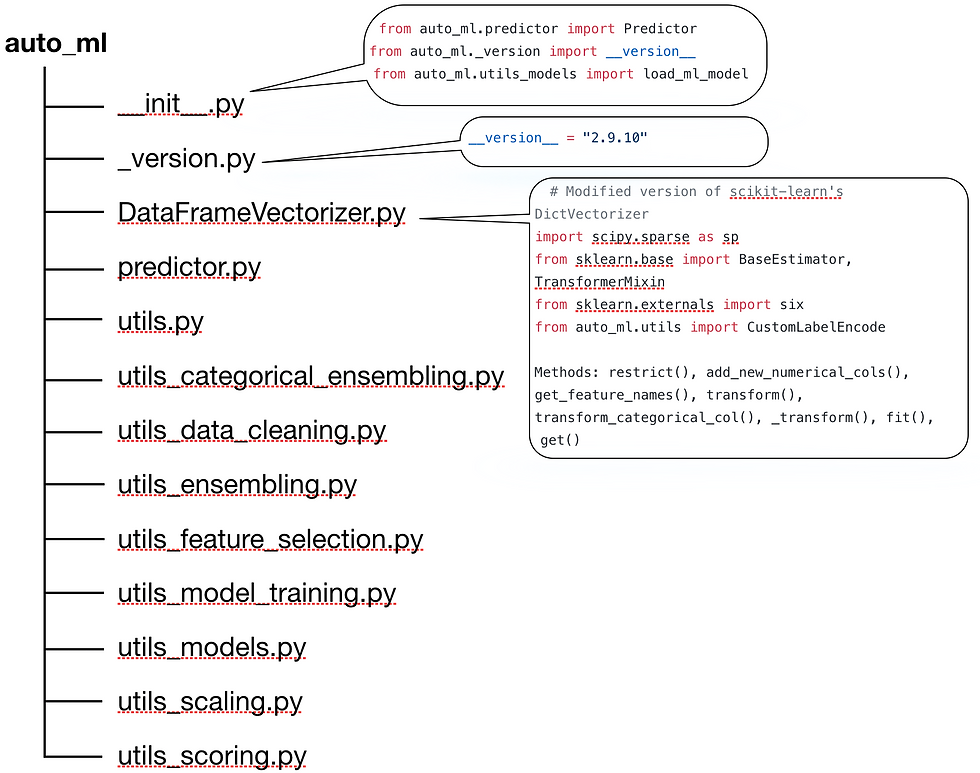

The GitHub repo of the code shows the following python scripts:

The class Predictor is the entry point. It takes in two parameters, the type of estimator and the column description. The first step of the Predictor is to call its method set_params_and_defaults.

set_params_and_defaults first removes all rows in the data frame with missing target values.

To compare different models then set the parameter ‘compare_all_models’ to ‘True’. Then AutoML will select 'RANSACRegressor', 'RandomForestRegressor', 'LinearRegression', 'AdaBoostRegressor', 'ExtraTreesRegressor', and 'GradientBoostingRegressor', for regressions. For classification, AutoML selects 'GradientBoostingClassifier', 'LogisticRegression' and 'RandomForestClassifier' by default. When 'compare_all_models' is not set, AutoML selects 'GradientBoostingRegressor' (regression) and 'GradientBoostingClassifier' (classification)

After this, it creates model pipelines and performs a search for models. The default search algorithms is GridSearchCV. Yet, when the number of combinations seems to be more than 50(magic number!) AutoML uses Evolutionary Algorithm Search with CV (EASCV) by default. AutoML also uses a python multiprocessing module for parallel processing. It doesn’t use EASCV for 'CatBoostClassifier' and 'CatBoostRegressor' as,

“For some reason, EASCV doesn't play nicely with CatBoost. It blows up the memory hugely, and takes forever to train”.

While I can go line by line of the code, I don’t want this post to become a tome or a post of static code analysis. The above discussions gives a birds’ eye view of the flow of logic in the library.

These algorithms are also among the top choices for many data scientists.

So when to use it?

Interestingly, these algorithms are also among the top choices for many data scientists. They also automate their parameter tuning using GridSearchCV or other algorithms. However, their approach would be more specific to their industry and focus on the present and future directions of the company.

AutoML is a very handy tool when one has no idea of the direction one has to take or a newbie. Or to come with a low effort study on the viability of a project.

That is, AutoML replaces experience and knowledge with the computational cost.

If you are adopting some automated ML frameworks beware of these:

AutoML reduces time by parallelizing the workloads. This may cause problems in architecting for production

The default parameters search space provided may not be optimal for your application. There is no quantitative proof, that this search space is relevant for all problems.

Might help a data scientist/student, who wants to compare a new algorithm.

You got to take steps to prevent these frameworks from stifling innovation at your place.

Data scientists do a lot more than trying different models. I spent a lot time comparing time series models during my post-doctoral research. The works would show the effort we took in understanding the data uncertainties. The knowledge of these uncertainties play a great role in building a robust system. These frameworks, at the most replace experience with computational cost. In platforms like AWS and Google, the cost of this search could be exorbitant.

Comments